Explainable Artificial Intelligence: The Key to Trustworthy AI Systems

Artificial Intelligence (AI) has revolutionized industries, from healthcare to finance, by enabling machines to perform complex tasks with remarkable accuracy. However, as AI systems become more advanced, a critical question arises: Can we trust decisions made by AI if we don’t understand how they were reached? This is where Explainable Artificial Intelligence (XAI) comes into play. XAI aims to make AI systems transparent, interpretable, and accountable, ensuring that their decisions can be understood by humans.

In this article, we’ll explore the concept of Explainable AI, its importance, and how it’s shaping the future of technology. By the end, you’ll understand why XAI is essential for building trust in AI systems and how it can be applied in real-world scenarios.

What is Explainable Artificial Intelligence?

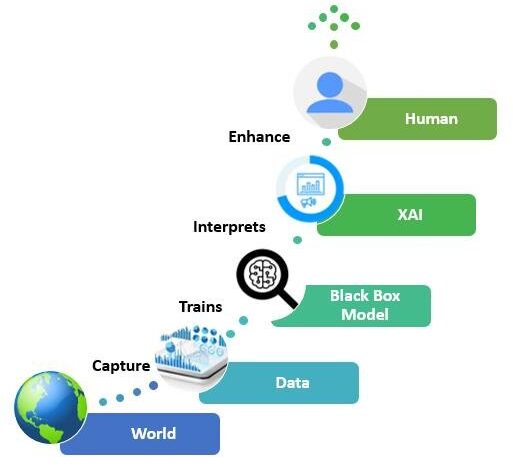

Explainable Artificial Intelligence (XAI) refers to AI systems designed to provide clear, understandable explanations for their decisions and actions. Unlike traditional “black-box” AI models, which operate in ways that are difficult to interpret, XAI focuses on transparency and accountability.

Key Features of XAI:

- Transparency: The ability to see how an AI system processes data and arrives at decisions.

- Interpretability: The capacity to explain AI outputs in human-understandable terms.

- Accountability: Ensuring that AI systems can justify their actions, especially in critical applications like healthcare or law enforcement.

For example, in healthcare, an XAI system can explain why it diagnosed a patient with a specific condition, highlighting the key factors that influenced its decision. This not only builds trust but also helps doctors make informed decisions.

Why is Explainable AI Important?

XAI matters most where AI systems make high-stakes decisions, such as in healthcare, finance, and law enforcement, where an unexplained error can seriously harm people. Here’s why XAI matters:

1. Building Trust in AI Systems

Trust is the foundation of any technology adoption. When users understand how an AI system works, they are more likely to trust its decisions. For instance, a bank using XAI to approve or reject loan applications can explain the reasoning behind each decision, reducing customer frustration and increasing transparency.
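
One common way to produce per-decision reasons like these is to use a model whose score breaks down into per-feature contributions. The sketch below assumes a hypothetical linear credit-scoring model: the feature names, weights, baseline applicant, and approval threshold are all illustrative placeholders, not taken from any real lender. Each contribution is a feature’s weight times how far the applicant’s value sits from the baseline applicant’s.

```python
# Hypothetical linear credit-scoring model; every name and number is illustrative.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8, "years_employed": 0.15}
BIAS = -0.5
THRESHOLD = 0.0  # assumed rule: approve when score >= THRESHOLD

# Assumed reference applicant that contributions are measured against.
BASELINE = {"income_k": 50.0, "debt_ratio": 0.3, "late_payments": 1.0, "years_employed": 4.0}


def score(applicant: dict[str, float]) -> float:
    """Bias plus the weighted sum of the applicant's features."""
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())


def explain(applicant: dict[str, float]) -> list[tuple[str, float]]:
    """Each feature's contribution relative to BASELINE, largest magnitude first."""
    contributions = {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


applicant = {"income_k": 42.0, "debt_ratio": 0.55, "late_payments": 3.0, "years_employed": 2.0}
s = score(applicant)
print("approved" if s >= THRESHOLD else "rejected", f"(score {s:.2f})")
for feature, contribution in explain(applicant):
    print(f"{feature:>15}: {contribution:+.2f}")
```

Because this model is linear, the contributions add up exactly to the gap between the applicant’s score and the baseline applicant’s score, so the explanation is faithful to the model. Here the late-payment history accounts for the largest share of the rejection. For non-linear models, methods such as SHAP estimate comparable additive per-feature contributions.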

2. Ensuring Ethical AI Practices

AI systems can inadvertently perpetuate biases present in their training data. XAI helps identify and address these biases by making the decision-making process visible. This is crucial for ensuring fairness and equity in AI applications.
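
Once decisions are visible, they can also be measured. A minimal sketch of one such check, assuming a hypothetical log of past decisions tagged with a group attribute: compute each group’s approval rate and the ratio of the lowest rate to the highest. The 0.8 cut-off follows the “four-fifths rule” heuristic from US employee-selection guidelines; it is one rough screen among many fairness metrics, not a universal standard.

```python
from collections import defaultdict

# Hypothetical decision log of (group, approved?) pairs; the data is illustrative only.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", True), ("B", False),
]


def approval_rates(log):
    """Fraction of approved decisions for each group."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in log:
        totals[group] += 1
        approvals[group] += approved  # a bool counts as 0 or 1
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    return min(rates.values()) / max(rates.values())


rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
verdict = "flag for review" if ratio < 0.8 else "no flag under this heuristic"
print(rates, f"ratio={ratio:.2f}", verdict)
```

A low ratio only signals a disparity worth investigating; deciding whether it reflects unfair bias still requires looking at why the model treats the groups differently, which is where the explanation techniques in this article come in.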

3. Compliance with Regulations

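Governments and regulatory bodies are increasingly mandating transparency in AI systems. For example, the European Union’s General Data Protection Regulation (GDPR) restricts decisions based solely on automated processing that significantly affect individuals, and entitles people to “meaningful information about the logic involved” in such decisions. Whether this amounts to a full “right to explanation” is debated, but XAI helps organizations provide this information and comply with such regulations.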

Applications of Explainable AI

XAI is making AI systems easier to understand, audit, and correct across many industries. Here are some real-world applications:

1. Healthcare

In healthcare, XAI is used to explain diagnostic decisions, recommend treatments, and predict patient outcomes. For instance, an XAI system can explain why it flagged a particular X-ray as abnormal, helping doctors verify its accuracy.
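
One simple technique behind image explanations like this is occlusion sensitivity: blank out one region of the image at a time and measure how much the model’s abnormality score drops. Regions whose removal lowers the score most are the ones the model relied on. The sketch below uses a tiny toy grid and a hypothetical stand-in for a trained model (`abnormality_score`, which just returns the brightest pixel); a real system would call a trained network instead.

```python
# Toy 6x6 grayscale "X-ray" (values 0 to 1); the bright 2x2 patch plays the lesion.
IMAGE = [
    [0.1] * 6,
    [0.1] * 6,
    [0.1, 0.1, 0.9, 0.9, 0.1, 0.1],
    [0.1, 0.1, 0.9, 0.9, 0.1, 0.1],
    [0.1] * 6,
    [0.1] * 6,
]


def abnormality_score(img):
    """Hypothetical stand-in for a trained model: the brightest pixel value."""
    return max(max(row) for row in img)


def occlusion_map(img, model, patch=2, fill=0.0):
    """Drop in model score when each patch x patch block is blanked out with `fill`."""
    base = model(img)
    rows, cols = len(img), len(img[0])
    heat = []
    for r0 in range(0, rows, patch):
        heat_row = []
        for c0 in range(0, cols, patch):
            occluded = [row[:] for row in img]  # copy, so the input image is untouched
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = fill
            heat_row.append(base - model(occluded))
        heat.append(heat_row)
    return heat


for row in occlusion_map(IMAGE, abnormality_score):
    print(" ".join(f"{drop:.1f}" for drop in row))
```

The printed 3x3 map is zero everywhere except the centre block (0.8), which is exactly where the bright patch sits. Overlaid on the original image as a heatmap, this tells a doctor which region drove the flag, so they can check whether it is a genuine abnormality.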

2. Finance

Banks and financial institutions use AI to detect fraud, assess credit risk, and automate trading. XAI explains the decisions these systems make, helping keep financial processes transparent and compliant with regulations.
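
For a global view of which inputs a fraud model depends on, one widely used model-agnostic technique is permutation importance: shuffle one feature’s values across the dataset and measure how much accuracy falls. The sketch assumes a hypothetical rule-based fraud “model” and a small synthetic dataset generated in code; with a real model you would call its prediction function instead. scikit-learn ships a production version as `sklearn.inspection.permutation_importance`.

```python
import random


# Hypothetical fraud "model": flags large transactions made from a new device.
def predict(tx: dict) -> bool:
    return tx["amount"] > 1000 and tx["new_device"]


def make_data(n: int, seed: int = 0) -> list[tuple[dict, bool]]:
    """Synthetic transactions, labelled by the same rule so baseline accuracy is 1.0."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        tx = {
            "amount": rng.uniform(0, 2000),
            "new_device": rng.random() < 0.5,
            "hour": rng.randrange(24),  # the model never reads this feature
        }
        data.append((tx, predict(tx)))
    return data


def accuracy(data: list[tuple[dict, bool]]) -> float:
    return sum(predict(tx) == label for tx, label in data) / len(data)


def feature_importance(data, feature: str, repeats: int = 5, seed: int = 1) -> float:
    """Mean accuracy drop after shuffling one feature's values across all rows."""
    rng = random.Random(seed)
    base = accuracy(data)
    drops = []
    for _ in range(repeats):
        values = [tx[feature] for tx, _ in data]
        rng.shuffle(values)
        shuffled = [({**tx, feature: v}, label) for (tx, label), v in zip(data, values)]
        drops.append(base - accuracy(shuffled))
    return sum(drops) / repeats


data = make_data(500)
for feature in ("amount", "new_device", "hour"):
    print(f"{feature:>10}: {feature_importance(data, feature):.3f}")
```

Shuffling `hour` changes nothing, because the model ignores it, while shuffling `amount` or `new_device` causes a clear drop. An auditor can use this kind of ranking to confirm that a fraud model relies on sensible signals rather than on irrelevant or sensitive ones.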

3. Autonomous Vehicles

Self-driving cars rely on AI to make split-second decisions. XAI can explain why a vehicle chose to brake suddenly or change lanes, enhancing safety and public trust.

Challenges in Implementing Explainable AI

While XAI offers numerous benefits, implementing it is not without challenges:

1. Complexity of AI Models

Advanced AI models, such as deep neural networks, are inherently complex. Simplifying these models for interpretability can sometimes reduce their accuracy.
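
This loss can be measured. One common approach is a global surrogate: fit a simple, readable model to mimic a complex one and report its fidelity, the fraction of inputs on which the two agree. The sketch assumes a hypothetical “complex” model that combines two features, and restricts the surrogate to a single threshold on one feature.

```python
import random


# Hypothetical "complex" model: its decision depends on two features jointly.
def complex_model(x: float, y: float) -> bool:
    return x + y > 1.0


def fidelity(points, surrogate) -> float:
    """Fraction of points on which the surrogate agrees with complex_model."""
    agree = sum(surrogate(x, y) == complex_model(x, y) for x, y in points)
    return agree / len(points)


def fit_stump(points) -> tuple[float, float]:
    """Grid-search the threshold t for the one-feature rule 'x > t' with best fidelity."""
    best_t, best_fid = 0.0, -1.0
    for i in range(101):
        t = i / 100
        fid = fidelity(points, lambda x, y, t=t: x > t)
        if fid > best_fid:
            best_t, best_fid = t, fid
    return best_t, best_fid


rng = random.Random(0)
points = [(rng.random(), rng.random()) for _ in range(2000)]
t, fid = fit_stump(points)
print(f"surrogate rule: x > {t:.2f}, fidelity {fid:.0%}")
```

With inputs spread uniformly over the unit square, the best rule of this form (t = 0.5) agrees with the model on only about 75% of inputs, so the easy-to-read explanation misrepresents the model roughly a quarter of the time.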

2. Balancing Transparency and Performance

There’s often a trade-off between making an AI system explainable and maintaining its performance. Striking the right balance is a key challenge for developers.

3. Lack of Standardization

There are currently no universally accepted standards or metrics for XAI, which makes it difficult to compare and evaluate different systems.

The Future of Explainable AI

The future of XAI looks promising, with ongoing research and development aimed at overcoming its challenges. Here’s what to expect:

1. Integration with AI Development Frameworks

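The ecosystems around major AI frameworks already include explainability tools: Captum provides attribution methods for PyTorch models, and model-agnostic libraries such as SHAP and LIME can explain models built with TensorFlow, PyTorch, and other frameworks. Explainability is likely to become a more standard part of the development workflow.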

2. Advancements in Visualization Techniques

New visualization tools, such as saliency maps that highlight which parts of an input most influenced a model’s output, are being developed to make AI decisions more intuitive and easier to understand.

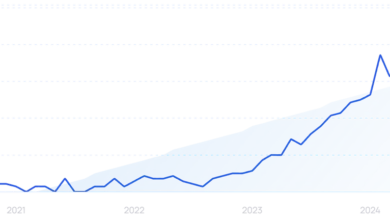

3. Increased Adoption Across Industries

As the benefits of XAI become more apparent, its adoption is expected to grow across sectors, from education to retail.

Conclusion

Explainable Artificial Intelligence is not just a technical requirement; it’s a necessity for building trust, ensuring ethical practices, and complying with regulations. By making AI systems transparent and interpretable, XAI empowers users to understand and trust the technology that’s shaping our world.

As AI continues to evolve, the importance of XAI will only grow. Whether you’re a developer, business leader, or end-user, understanding XAI is crucial for navigating the future of AI responsibly.

